With generative artificial intelligence (AI) tools like ChatGPT well and truly becoming an ‘overnight’ sensation, many have been awakened to the potential for AI to revolutionise the way we do business. But the reality is that the use of AI technologies in everyday business functions is already commonplace. From Netflix using AI to recommend movies based on what we have previously watched, to the agricultural industry using AI for crop and soil management, or e-commerce product recommendation engines re-targeting consumers based on past purchases, AI is already disrupting traditional business.

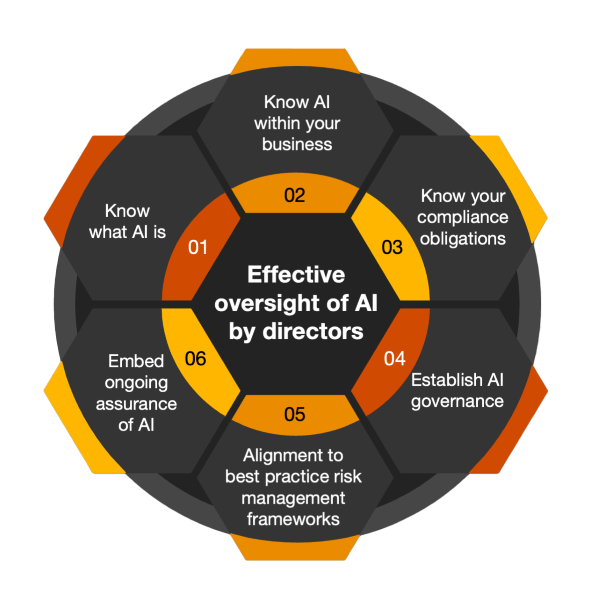

In light of the staggering increase in AI use, directors are under mounting pressure to ensure their organisations are prepared to use AI in a responsible manner. But what does this really mean in practice? New Zealand laws have yet to clearly define what AI is, let alone what a director’s duties are when it comes to AI. Although specific black letter law regulating AI has yet to be formalised, directors need to understand their role and responsibilities in the deployment of AI. In short, that means implementing AI governance.

AI is happening now, and directors should look to stay ahead of the curve. The choice is not between using AI and not using AI. Given its prevalence and trajectory, AI will be used by an organisation either with governance or without it, and that choice is in the hands of the directors.

We unpack the relevance of directors’ duties in the context of AI and how directors can effectively manage these duties. AI, with all its promises and opportunities, comes with a range of known risks. Without appropriate organisational governance, there is a real possibility that AI becomes a source of harm and risk (and therefore, liability) to your business.

Given the increasing trend of using AI in the workplace, understanding the technology and its impact falls directly within the scope of a director’s obligation to act in good faith and in the best interests of the company.

Accordingly, directors must:

Despite a lack of explicit AI laws in New Zealand, legal obligations may arise from existing legislation or regulations, such as privacy, human rights, or anti-discrimination laws. Directors should be aware of how the use of AI in their organisations may contravene these laws, and ensure mitigating processes are put in place to manage compliance.

Directors must turn their minds to AI because it affects every aspect of their oversight duties. Directors and officers must consider how to manage the data, models and people involved in implementing AI. Critically, directors cannot determine risk effectively for their organisation in the modern world without dealing with the impact of AI.

Directors must consider the impact of the use of AI on society, people and organisations. Risks to an organisation can be commercial, regulatory and reputational in nature. In particular, consider the impact on your organisation’s key stakeholders such as your employees and customers. Consider also the risks of not adopting AI solutions.

As with any other business risk, managing AI is not a ‘set and forget’ exercise: routine assurance of AI systems, and of the governance framework itself, is required to ensure compliance with regulations and best practice.
